I thought a wee article on what a server is, and why servers are so expensive compared to your average PC, might be interesting. When you hear someone say they have built a server for the house, what they usually mean is they’ve built a PC and have it running various applications which serve ‘stuff’. A real server is a different beast altogether.

So what makes a server a server? It boils down to the hardware feature set. Sure, you can get low-end servers which are nothing more than a PC in a fancy rack mount chassis, but let’s take a look at the higher-end goodness.

1. The Chassis

Servers are generally built in 19″ rack mounted chassis. This comes down to space efficiency and convenience. Floor space is always a limiting factor in data centres, and being able to stack ’em high is advantageous for many reasons (cooling, cabling, density, convenience of install/removal etc). Rack height is measured in Rack Units (1U = 1.75″, about 4.45cm), and a typical rack may be 42U tall and therefore, in theory at least, able to hold 42 1U servers. In practice you are usually limited by power and cooling, not to mention the cabling and networking requirements of so many machines. That said, a rack is a highly desirable way to house servers and related equipment.
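To put numbers on the space-versus-power trade-off, here is a rough Python sketch. It uses the standard 1U = 1.75″ (44.45mm) rack unit. The 8kW rack power budget and 400W per-server draw are purely hypothetical example figures, so plug in your own data centre’s numbers.

```python
# Rough rack-capacity sketch. 1U = 1.75 inches = 44.45 mm.
# The power figures used below are hypothetical examples, not real data.
RACK_UNIT_MM = 44.45


def rack_height_mm(units: int) -> float:
    """Mounting height occupied by the given number of rack units."""
    return units * RACK_UNIT_MM


def servers_that_fit(rack_units: int, server_units: int,
                     power_budget_w: float, server_draw_w: float) -> int:
    """Servers a rack can take, limited by whichever runs out first: space or power."""
    by_space = rack_units // server_units
    by_power = int(power_budget_w // server_draw_w)
    return min(by_space, by_power)


if __name__ == "__main__":
    print(f"42U is about {rack_height_mm(42) / 10:.1f} cm of mounting space")
    # Hypothetical: 8 kW available per rack, 1U servers drawing ~400 W each.
    print("1U servers that fit:", servers_that_fit(42, 1, 8000, 400))
```

With those example figures the rack runs out of power at 20 servers, leaving over half of its 42U empty.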

A single 1U server has to pack a lot of electronics into quite a small space, so the PSUs, CPU and chassis cooling, motherboard, hard drive caddies etc are all custom. In other words, these high-density machines incur higher design and manufacturing costs than your average floor-standing machine.

Rack mount servers are usually mounted on rails which allow them to slide in and out of the rack with ease. These rails have to be strong enough to hold the weight of the server when fully extended.

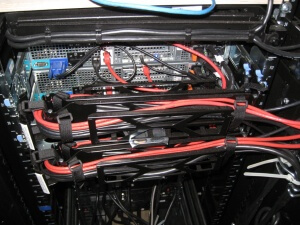

Some even have cable management arms which allow you to pull the machine out without having to unplug any cables from the back (the cables are long enough to snake around the arm which extends straight as the server is pulled forwards). As you can imagine this extra bulk of metal doesn’t come cheap either.

Typical Cable Management Arm.

2. Hot Plugging

One of the major advantages of server hardware is the ability to add and remove various devices without powering the machine down or taking the chassis apart. Not all servers allow hot swapping, but most, at the very least, allow disks to be swapped in and out using what’s known as a disk caddy.

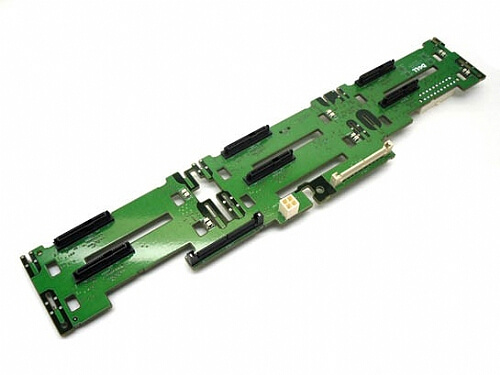

To hot swap disks like this you need an active or passive backplane. The backplane provides the data and power connectors for each disk and often has its own firmware. As well as letting you add and remove disks whenever you like, a server also usually comes with a hardware RAID controller for redundancy and performance reasons. A RAID controller often has a fair chunk of onboard processing and memory, as well as a backup battery.
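Part of that onboard processing goes on parity calculations. In single-parity RAID levels such as RAID 5, the parity block of each stripe is the XOR of its data blocks, so any one missing block can be recomputed from the others. The Python sketch below shows the idea on three hypothetical tiny ‘disks’. A real controller rotates parity across the drives and does this in dedicated hardware, so treat it as an illustration of the principle only.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Three hypothetical data disks, each holding one 4-byte stripe unit.
data = [b"\x01\x02\x03\x04", b"\x10\x20\x30\x40", b"\xaa\xbb\xcc\xdd"]
parity = xor_blocks(data)  # stored on a fourth disk

# Simulate losing disk 1: XOR the surviving data with the parity to rebuild it.
survivors = [data[0], data[2], parity]
rebuilt = xor_blocks(survivors)
print(rebuilt == data[1])
```

The same XOR that creates the parity is used to rebuild, which is why a single-parity array survives exactly one failed disk.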

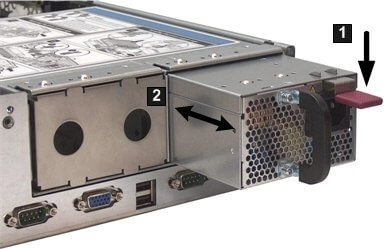

Another important hot-pluggable device is the PSU, or rather PSUs. Most higher-end servers come with two power supplies, both of which can be replaced while the server is running. Having two power supplies not only lets you replace one if it goes faulty, but also allows the server to be fed from independent power sources without the need for an external automatic transfer switch.
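For that redundancy to mean anything, the supplies are normally sized so that either one alone can carry the whole server (often described as 1+1). Here is a minimal Python check, assuming a hypothetical pair of 750W PSUs and made-up load figures:

```python
def survives_single_psu_failure(psu_ratings_w: list[float], load_w: float) -> bool:
    """True if the remaining PSUs can still carry the load after any one fails."""
    if len(psu_ratings_w) < 2:
        return False  # a single PSU is a single point of failure
    # Worst case is losing the largest supply.
    return sum(psu_ratings_w) - max(psu_ratings_w) >= load_w


# Hypothetical pair of 750 W supplies.
print(survives_single_psu_failure([750, 750], 600))  # load fits on one PSU
print(survives_single_psu_failure([750, 750], 900))  # needs both PSUs: not redundant
```

The second case is the trap. The server runs happily on two supplies, but goes down as soon as one feed drops.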

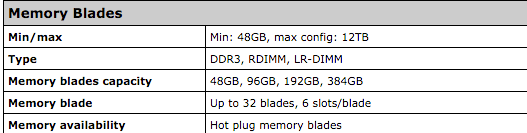

If we look at even higher-end server kit, we get into the realm of hot-plug memory and I/O. For example, the beast that is the Bullion S8 server allows RAM to be hot swapped from the front of the chassis. Think about that for a second and you will realise how much the architecture has to support to make that work. And it’s not just RAM: general purpose I/O cards, such as Network Interface Cards, can be hot swapped too.

3. Management & Remote Access

One of the biggest headaches faced by having servers hosted in a datacenter a million miles away from your office is how to monitor, manage and access them remotely. Thankfully there are several really useful ways to help with this.

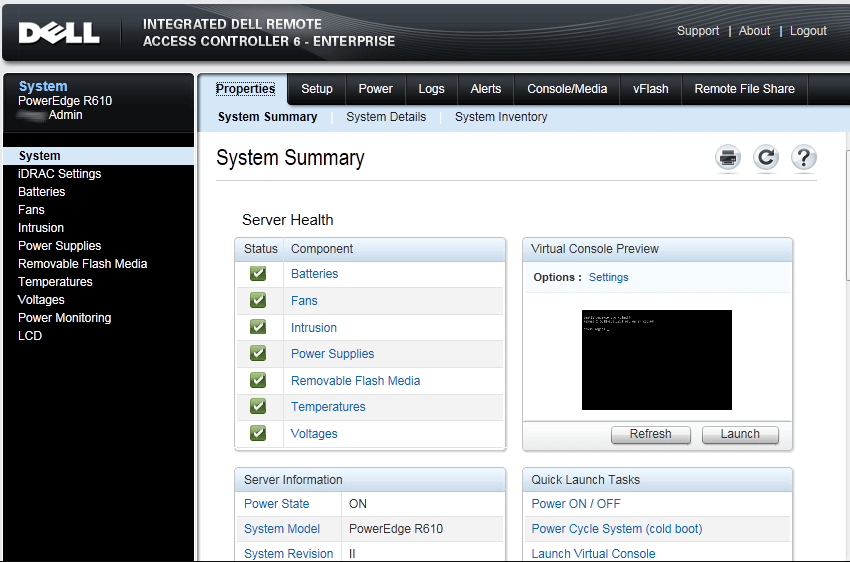

Let’s be clear: by ‘access remotely’ I mean access to the underlying hardware, not the OS (that’s what SSH, RDP etc are for). To get hardware-level access you need ‘console’ access, the same as having a physical monitor and keyboard plugged into the server. There are external ways to achieve this, such as a KVM over IP unit, but most manufacturers build it in: iDRAC for Dell, iLO for HP, IPMI for Supermicro etc. As well as console access, these give you general status and health reporting for the hardware.

As you can see in the iDRAC example above, you get a web interface providing access to all sorts of hardware monitoring and virtual console access, as well as neat stuff like being able to blink the LED on a particular server, or on a particular disk in that server. It’s little things like that which make life so much easier when working with remote datacenter staff.
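Most of these controllers also speak IPMI over the network, which makes this sort of thing scriptable. The Python sketch below only builds command lines for the open-source `ipmitool` CLI. It assumes IPMI over LAN is enabled on the BMC (on iDRAC and iLO it may need switching on), and the BMC address and user name are hypothetical. `chassis identify` is the IPMI command behind the ‘blink the LED’ feature.

```python
import shlex


def ipmitool_cmd(host: str, user: str, *args: str) -> list[str]:
    """Build an ipmitool command line for a BMC reachable over the LAN.

    -E makes ipmitool read the password from the IPMI_PASSWORD environment
    variable, so it never appears on the command line.
    """
    return ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-E", *args]


# Hypothetical BMC address and user name.
BMC, USER = "10.0.0.42", "admin"

health = ipmitool_cmd(BMC, USER, "sdr", "list")                # sensor readings
blink = ipmitool_cmd(BMC, USER, "chassis", "identify", "60")   # ID LED for 60 s

print(shlex.join(blink))
# To actually run it: subprocess.run(blink, check=True)
```

Keeping command construction separate from execution makes it easy to loop over a list of BMCs or log exactly what would be sent.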

Servers also often have LCD displays showing their current status, such as errors with PSUs or disks etc.

4. CPU & RAM

When you think of server CPUs you probably think of Xeons. These CPUs are made by Intel and have been the workhorses of servers for nearly two decades. In the world of x86 the only other real alternative is AMD’s Opteron line. To be honest, you’ll likely find an Opteron that is as fast as a comparable Xeon, and the deciding factor in ‘which is better’ is likely either price or some specific performance metric for whatever application you want to run on them.

Sticking with Xeon, what makes it faster, better and more expensive than your typical desktop CPU? Shit loads of grunt and plenty of cache. Most server CPUs also have many more processing cores than your average desktop CPU, as well as server-specific features such as ECC memory and multi-socket support.

Take the Intel Xeon E5-2699 v3 as an example: it has 18 physical cores and 45MB of cache, giving a total of 36 threads per socket (with Hyper-Threading). Server motherboards often support multiple CPUs; the E5-2600 v3 family supports up to two sockets, so all of a sudden you have a server with 36 physical cores, or 72 threads. Compare that to your typical 4 or 8 core desktop and you’ll understand why they come in at a hefty £3,500 per CPU.
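The core and thread arithmetic is simple multiplication. Here is a quick Python sketch using the E5-2699 v3 figures above. It assumes Hyper-Threading gives two threads per core and a two-socket board (the most the E5-2600 v3 family supports).

```python
def core_counts(sockets: int, cores_per_socket: int,
                threads_per_core: int = 2) -> tuple[int, int]:
    """Return (physical cores, logical CPUs) for a multi-socket server."""
    physical = sockets * cores_per_socket
    return physical, physical * threads_per_core


# Xeon E5-2699 v3: 18 cores per socket, two threads per core with Hyper-Threading.
print(core_counts(1, 18))  # one socket
print(core_counts(2, 18))  # two sockets
```

Two sockets give 36 physical cores and 72 logical CPUs. Linux tools such as `top` list each logical CPU separately.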

In case you have never seen a multiple CPU motherboard, they look something like this. Note how each CPU has a bank of RAM slots physically located next to its socket.

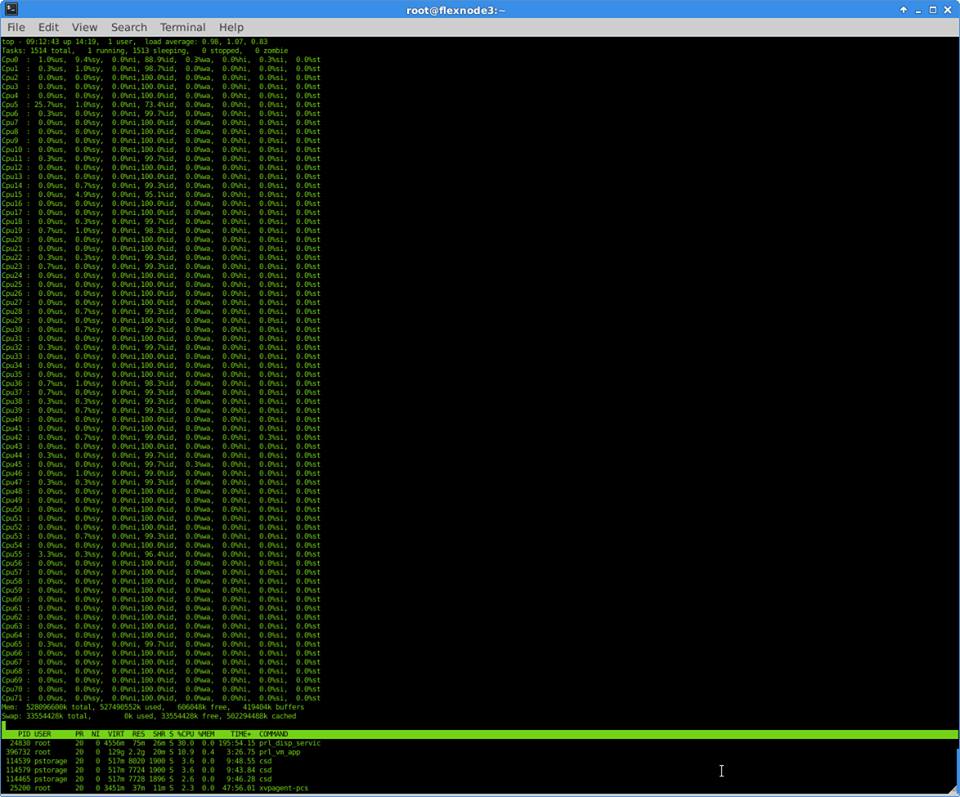

This is how 72 logical cores look in the output of ‘top’ (oh yeah, and a casual 512GB of RAM).

Another important difference is RAM (or memory, whatever you want to call it). Servers usually require ECC memory. Error-correcting code memory is used because it can ‘fix’ internal data corruption on the fly. Again, this is more expensive than normal memory, but it provides increased stability. Simpler parity memory stores an extra bit for every stored ‘word’. This extra bit, called the parity bit, is the result of a calculation performed on the rest of the word. The problem is that parity can only detect errors, which is useful, but not as useful as being able to correct them. ECC can do both. This isn’t Wikipedia, so I won’t go into the details of how it does this.
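To make the difference concrete, here is a toy Python sketch. A single parity bit notices that a bit flipped but cannot say which one. A Hamming(7,4) code, by contrast, adds three check bits to four data bits so that the pattern of failed checks (the syndrome) points straight at the flipped bit. Real ECC DIMMs use a wider SECDED code (8 check bits per 64-bit word, hence 72-bit-wide modules) implemented in the memory controller, so this shows the principle rather than what the hardware literally does.

```python
def parity_bit(bits: list[int]) -> int:
    """Even parity: 1 if the number of set bits is odd."""
    return sum(bits) % 2


def hamming74_encode(nibble: int) -> list[int]:
    """Encode 4 data bits as a 7-bit Hamming codeword (list index 0 = position 1)."""
    d = [(nibble >> i) & 1 for i in range(4)]
    code = [0] * 8                           # index 0 unused: indices = positions 1..7
    code[3], code[5], code[6], code[7] = d   # data bits in non-power-of-two positions
    code[1] = code[3] ^ code[5] ^ code[7]    # covers positions with bit 0 set
    code[2] = code[3] ^ code[6] ^ code[7]    # covers positions with bit 1 set
    code[4] = code[5] ^ code[6] ^ code[7]    # covers positions with bit 2 set
    return code[1:]


def hamming74_decode(word: list[int]) -> tuple[int, int]:
    """Return (data nibble, position of corrected bit); position 0 means no error."""
    code = [0] + list(word)
    syndrome = 0
    for pos in range(1, 8):
        if code[pos]:
            syndrome ^= pos                  # XOR of the positions of all set bits
    if syndrome:
        code[syndrome] ^= 1                  # flip the single bad bit back
    nibble = code[3] | (code[5] << 1) | (code[6] << 2) | (code[7] << 3)
    return nibble, syndrome


word = hamming74_encode(0b1011)
corrupted = word.copy()
corrupted[4] ^= 1                            # simulate a single-bit flip at position 5

# Parity spots that something changed, but not where.
print(parity_bit(word) != parity_bit(corrupted))
# Hamming recovers the original data and reports which bit it fixed.
print(hamming74_decode(corrupted))
```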

As well as stable memory, a server often requires LOTS of memory. When I say lots, I mean lots. Your average desktop machine as of 2016 may have, say, 16 or 32GB of RAM, whereas a server may have 512GB (see the output of ‘top’ above) or, in the case of the Bullion S8 range, as much as 12TB. Yes, twelve terabytes.

Of course, 12TB of RAM isn’t ‘normal’ by any means; the point is that server architecture is designed for expandability, so the ability to add more disks, more RAM, another CPU etc is all part of the design.

5. Disks

We touched on disks under hot-plugging but there’s more to be said here. Traditionally in desktops you had IDE interfaces for disks and in servers it would be SCSI. These days it’s SATA for desktops and SAS for servers (also near-line SAS).

Like SCSI was, SAS (Serial Attached SCSI) is designed for servers. SAS disks are more expensive than SATA disks and come in slightly different capacities. The main difference is spindle speed, the rotational speed of the platters inside the drive. SATA disks usually spin at 7.2k RPM, while SAS goes up to 15k RPM. A faster spindle means lower rotational latency (the wait for the right sector to come round under the head), so access times can be up to roughly twice as quick.
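Rotational latency is easy to estimate: on average the drive waits half a revolution for the right sector to arrive. Here is a quick Python calculation for the two spindle speeds mentioned. Seek time, the movement of the head itself, is a separate component and is not modelled here.

```python
def avg_rotational_latency_ms(rpm: int) -> float:
    """Average rotational latency: half a revolution, in milliseconds."""
    ms_per_revolution = 60_000 / rpm
    return ms_per_revolution / 2


for rpm in (7_200, 15_000):
    print(f"{rpm:>6} RPM: {avg_rotational_latency_ms(rpm):.2f} ms")
```

That works out to roughly 4.2ms versus 2.0ms, in line with the ‘up to twice as quick’ figure.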

SAS disks also have a higher mean time between failures (MTBF), meaning they are regarded as more reliable: they are rated to fail less often per hour of operation. This is obviously advantageous given servers are usually on 24/7.
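Datasheet MTBF figures are easier to reason about when converted to an annualised failure rate (AFR), the expected fraction of drives failing per year of 24/7 running. This Python sketch assumes a constant failure rate (the exponential model that MTBF figures imply), and the two MTBF values are hypothetical. Real-world failure rates often differ from datasheet numbers.

```python
import math

HOURS_PER_YEAR = 24 * 365  # drive powered on 24/7


def annualised_failure_rate(mtbf_hours: float) -> float:
    """AFR under a constant failure rate: 1 - exp(-hours_per_year / MTBF)."""
    return 1 - math.exp(-HOURS_PER_YEAR / mtbf_hours)


# Hypothetical datasheet figures for illustration only.
for label, mtbf in (("drive A", 1_000_000), ("drive B", 2_000_000)):
    print(f"{label}: MTBF {mtbf:,} h -> AFR {annualised_failure_rate(mtbf):.2%}")
```

Across a hypothetical fleet of 1,000 drives, that is roughly 9 versus 4 failures a year.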

SAS isn’t just about physical differences though; the SAS protocol is very different to SATA. I’m not going in depth here, but in short: SAS supports multipath I/O and multiple initiators, it allows longer cables (thanks to higher signalling voltages), the bus is full duplex (SATA is half duplex), and the command set has more functionality (error reporting, recovery, block reclamation etc).

Of course, these days SSDs are becoming far more popular. Although an SSD fundamentally supports only a limited number of writes (unlike spinning rust, which theoretically has no limit), SSDs are finding their place in the server world. A typical use might be as read/write cache disks in a SAN, or as a pair in a RAID array for the OS.

Server-grade SSDs are, as expected, far more expensive than consumer-grade drives. They come in various sizes and biases; for example, a write-biased SSD will be selected for more write-intensive workloads. The main differences are in their program/erase endurance and the type of NAND flash memory used (as well as management tools and interfaces, such as SAS).
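SSD endurance is usually quoted either as drive writes per day (DWPD) over the warranty period or as total terabytes written (TBW), and the two convert directly. Here is a Python sketch with hypothetical 800GB drives on a 5-year warranty:

```python
def tbw_from_dwpd(dwpd: float, capacity_gb: int, warranty_years: int) -> float:
    """Total terabytes written implied by a drive-writes-per-day rating."""
    return dwpd * capacity_gb * 365 * warranty_years / 1000


# Hypothetical 800 GB drives with a 5-year warranty.
read_biased = tbw_from_dwpd(1, 800, 5)
write_biased = tbw_from_dwpd(10, 800, 5)
print(f"read-biased:  {read_biased:,.0f} TB written")
print(f"write-biased: {write_biased:,.0f} TB written")
```

The tenfold DWPD difference translates directly into ten times the rated write volume, which is a large part of what you pay extra for in a write-biased drive.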

Spinning rust will likely remain the preferred choice where high capacity is required, but SSDs certainly have their place in servers and SAN solutions. There will come a point when SSDs rival spinning rust in terms of longevity and value, but for now their use in servers is limited, albeit definitely growing.

As well as high-throughput, low-latency disks, server chassis often hold lots of disks. The more disks a chassis can hold, the lower the cost per terabyte, because the cost of the chassis, motherboard, CPUs and PSUs is spread across more storage. Therefore you often find chassis which can hold 12 or more disks. These days most server disks are 2.5″ as opposed to 3.5″, which helps to increase overall density.
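The cost argument is just fixed overhead being shared out. Here is a Python sketch with entirely hypothetical prices: £4,000 for the chassis, board, CPUs and PSUs, and £200 per 4TB disk.

```python
def cost_per_tb(base_cost: float, disks: int, disk_cost: float, disk_tb: float) -> float:
    """Total cost divided by raw capacity; ignores RAID overhead and running costs."""
    return (base_cost + disks * disk_cost) / (disks * disk_tb)


# Hypothetical prices in GBP.
for disks in (4, 12, 24):
    print(f"{disks:>2} disks: £{cost_per_tb(4000, disks, 200, 4):.0f} per TB")
```

As the disk count grows, the cost per TB falls towards the price of the disks alone.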

6. Network Interfaces

Given the vast majority of servers are part of an Ethernet network, they need fast network interfaces, and usually more than one. Server-spec NICs often come with onboard processing, such as a TCP offload engine, and are available as PCIe cards with as many as 4 ports per card (a.k.a. a quad-port NIC).

A desktop NIC is likely 1Gbps (gigabit Ethernet), whereas server NICs these days are often 10Gbps, ten times the speed. Of course, for 10GigE you also need a 10GigE switch, suitable cabling (Cat6 for short runs, Cat6a for the full 100m) etc.
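To see what ‘ten times the speed’ means in practice, here is a Python back-of-envelope calculation for moving 1TB at line rate. It deliberately ignores protocol overhead and disk speed, so real transfers will be slower.

```python
def transfer_seconds(num_bytes: int, link_gbps: float) -> float:
    """Time to move num_bytes at the raw line rate (no protocol overhead)."""
    return num_bytes * 8 / (link_gbps * 1e9)


ONE_TB = 10**12  # decimal terabyte
for gbps in (1, 10):
    secs = transfer_seconds(ONE_TB, gbps)
    print(f"{gbps:>2} Gbps: {secs:,.0f} s (about {secs / 60:.0f} minutes)")
```

That is over two hours on gigabit versus around a quarter of an hour on 10GigE, before any real-world overhead.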

The reason for multiple NICs isn’t just throughput; it’s often redundancy. It is common to pair NICs in ‘bonds’ to protect against NIC failure or, more likely, switch failure. There are different bonding modes, but active/passive and LACP are probably the most common.
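Active/passive bonding (the Linux bonding driver calls it active-backup) boils down to a simple rule: one NIC carries the traffic, and the other takes over when the active link goes down. The Python sketch below models just that rule. The NIC names and switch layout are hypothetical, and real drivers add link monitoring intervals, gratuitous ARPs and optional failback on top.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Nic:
    name: str
    link_up: bool


def pick_active(nics: list[Nic], current: Optional[str] = None) -> Optional[str]:
    """Stay on the current NIC while its link is up; otherwise fail over
    to the first NIC (in priority order) whose link is up."""
    up = [nic.name for nic in nics if nic.link_up]
    if current in up:
        return current
    return up[0] if up else None


# Hypothetical pair: eth0 cabled to switch A, eth1 to switch B.
bond = [Nic("eth0", True), Nic("eth1", True)]
active = pick_active(bond)           # eth0 carries traffic, eth1 sits idle
bond[0].link_up = False              # switch A dies
active = pick_active(bond, active)   # traffic moves to eth1
print(active)
```

Cabling each NIC to a different switch is what turns this from NIC redundancy into switch redundancy.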

Other features of server NICs include onboard PXE support (for booting an OS over the network), TCP offload, VLAN tagging in hardware, large MTU (jumbo frame) support, direct I/O (such as Intel’s DDIO technology) and SR-IOV (a PCIe extension that lets one physical NIC appear as multiple virtual devices).

Of course, when you think server NIC you may also think of fibre, so here is a picture of a lovely 10Gbit fibre NIC just for you.

Another popular alternative is Infiniband, with Intel and Mellanox being the big brands for adapters. A common configuration aggregates four 10Gbit lanes (4x QDR), giving 40Gbps of signalling bandwidth per link (about 32Gbps of actual data after 8b/10b encoding). Infiniband is more expensive than traditional Ethernet; however, performance-wise it can pay for itself if used for the right applications. One of the most popular uses is SAN switching fabrics, thanks to its low latency and high throughput.
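The gap between signalling rate and usable data rate comes from line encoding. Here is a Python sketch of the arithmetic for a 4x link. The QDR figures correspond to the 4 x 10Gbit configuration in the text, while the FDR line (14.0625Gbit/s lanes with 64b/66b encoding) is included purely for comparison.

```python
def ib_data_rate_gbps(lanes: int, lane_gbps: float,
                      payload_bits: int, encoded_bits: int) -> float:
    """Usable data rate of an Infiniband link after line-encoding overhead."""
    return lanes * lane_gbps * payload_bits / encoded_bits


qdr = ib_data_rate_gbps(4, 10.0, 8, 10)      # 4x QDR, 8b/10b: 40 Gbit/s signalling
fdr = ib_data_rate_gbps(4, 14.0625, 64, 66)  # 4x FDR, 64b/66b: 56.25 Gbit/s signalling
print(f"4x QDR: {qdr:.1f} Gbit/s of data")
print(f"4x FDR: {fdr:.1f} Gbit/s of data")
```

The move from 8b/10b (20% overhead) to 64b/66b (about 3%) is a big part of why later generations deliver so much more usable bandwidth.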

Here’s what an Infiniband connector looks like:

To give you an idea of the price difference, a 10m Cat5e cable costs around £5, whereas a 10m HPE Infiniband cable is around £250.

That’s a quick summary of why a desktop is not a server and why servers are so expensive in comparison. In case you were wondering, the green server at the top of the page is a Bullion S8. They come in at around $55,000 for the two-CPU-socket / 512GB RAM variant. If you want one, check out http://www.bull.com/bullion-servers